Interface design in the age of qualiatech: Do you want to be a button?

Posted on 19 July 2024 by

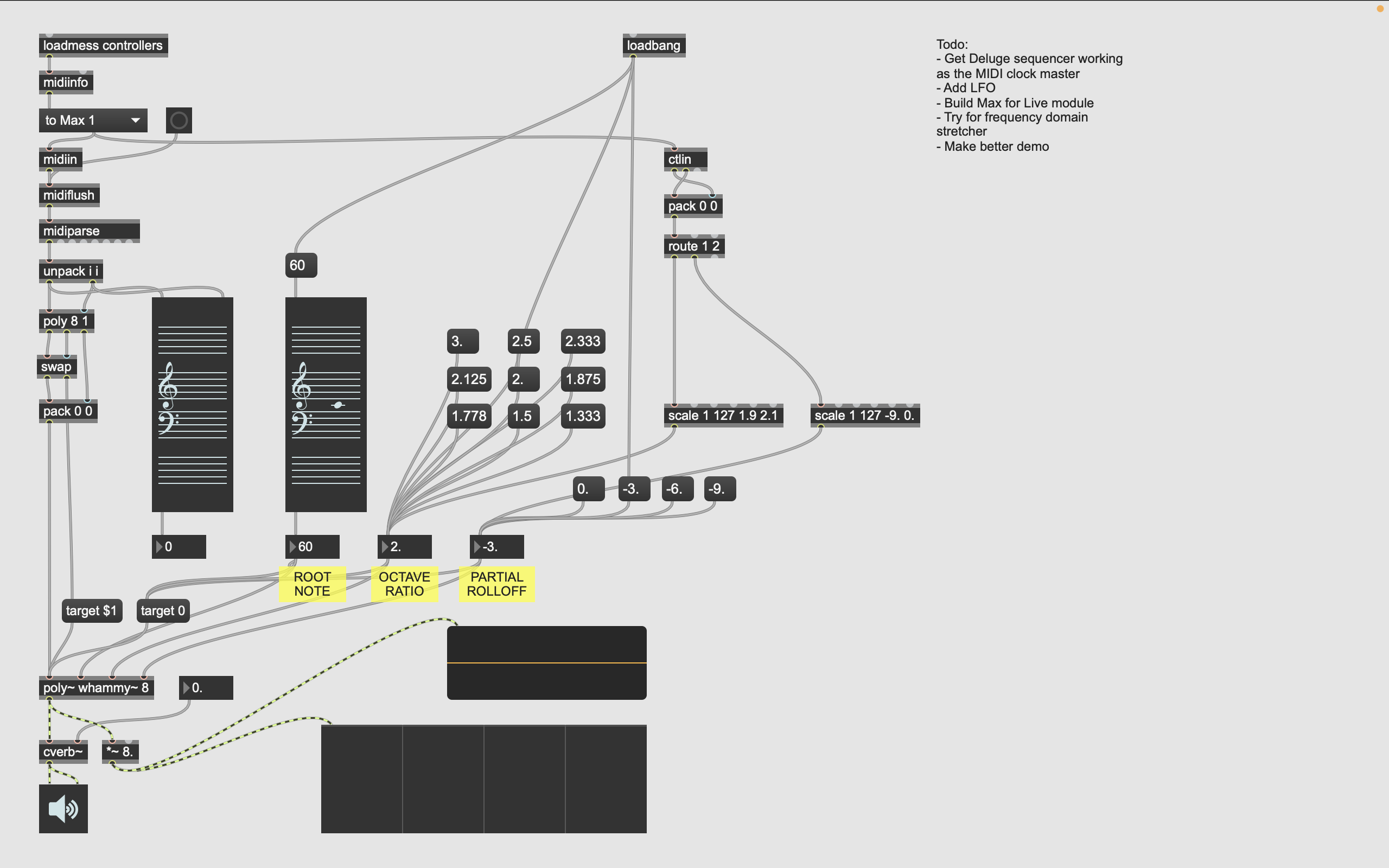

There have been many times in my life that I have felt at one with a computer screen, but one in particular stands out – when I discovered something called Max/MSP during one of my second-year classes at university. Max/MSP is a visual programming language, where instead of writing a program by typing text into a text editor, the programmer manipulates a directed acyclic graph of nodes on a canvas. Generally this is used for novel realtime audio/video production.

This was a stage in my life in which I was living quite close to school, and I would wake up every morning, scarf down a packet of instant noodles and roll into class still only half awake. I’d sit down in the design lab, recline my chair back and draw the 27-inch iMac closer to me until its screen fully encompassed my Cartesian theater. All other sensory modalities diminished until my one limb was the cursor on the screen. I fully inhabited this two-dimensional plane, manipulating raw thoughts and concepts as if they were my own – nodes and encapsulations growing organically and without restraint.

For the first time, I was able to make abstract concepts and algorithms visually representable on a computer screen using actual diagrams and visualisations, rather than lifeless strings of text. Yet, I still felt hamstrung by the knowledge that this clumsy mouse and keyboard based interaction was probably highly sub-optimal for this kind of work, and also by the fact that these elegant constructs I deigned to create upon my screen only existed in reality through many, many (many) intermediate layers of abstraction. For all our advances in hardware, I was no closer to directly implementing my own thoughts in silico than the grandfathers at IBM were in the sixties, pouring their souls instruction by instruction through a 16-bit funnel.

This was the year 2010, and although someone else in the lab was experimenting with a primitive consumer-grade EEG device, viable brain-computer interfaces seemed far in the distant future. Inspired by my experiences working with visual programming languages, I chose to focus my efforts as an interaction designer on alternative programming environments instead. I watched every video Bret Victor ever made, and I lurked in the Future of Coding community, a watering hole for designers and developers with similar interests.

I became something of a disillusioned radical, dissatisfied with our current computing paradigms – which I felt limited our capacity for communication, organisation, and self-expression – and which would require complete overhaul before we ever let them into our heads. I studied functional programming, and learned to think in terms of catamorphisms and anamorphisms, and yet my attention was always drawn back to the final and most interesting unfolding – the one which happens after the photons from the screen hit the cone cells in the retina.
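
For readers who haven't met these terms: a catamorphism folds a structure down into a single value, and an anamorphism unfolds a structure from a seed. What follows is only a minimal sketch restricted to plain lists, written in Python rather than a functional language; the names `cata` and `ana` are my own shorthand for illustration, not anything from a standard library.

```python
from functools import reduce


def cata(step, seed, items):
    """Catamorphism on lists (a fold): collapse a list into one value."""
    return reduce(step, items, seed)


def ana(step, seed):
    """Anamorphism on lists (an unfold): grow a list from a seed.

    `step` returns None to stop, or (item, next_seed) to continue.
    """
    items = []
    while (result := step(seed)) is not None:
        item, seed = result
        items.append(item)
    return items


# Unfold a countdown from the seed 5, then fold it back into a sum.
countdown = ana(lambda n: None if n == 0 else (n, n - 1), 5)
total = cata(lambda acc, n: acc + n, 0, countdown)
```

Here `countdown` unfolds to `[5, 4, 3, 2, 1]` and `total` folds it back down to `15`.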

Ten years later, it was the year 2020. Alternative programming paradigms were no closer to hitting the mainstream, and I was not making any progress with my own projects – I got stuck trying to write a type inference system. I was forced to admit that I was far more interested in people than computers, and turned my attention towards neuroscience instead – and that’s where it’s been ever since.

Now, it’s 2024. Virtual reality is just around the corner, and we’re getting closer and closer to read-write access to the brain. I feel the time is right for us to revisit these ideas together.

Classical computing

Many years ago, a friend said to me: Oh, I wish I could just send you a Python object. She was expressing a frustration related to the main problem I have with our current operating systems: Code and data types are not a core part of the operating system as it presents itself to the user. Instead we have these things called files and file formats, and we are forced to store and pass those around instead.

This is a world in which the act of coding is regarded as a secondary, niche function of the operating systems we use every day. Code is not expected to be understood or edited by the end user. Code is regarded with suspicion, and is cordoned off inside “apps” or contained within the sandbox of the web browser in order to protect the user from its potentially malicious activities. The user is not free to interact with the code or underlying data structures on their own terms – the interfaces we use tend to be written in a one-size-fits-all way by a trained elite at a technology company very far away.

If the user does decide to roll up their sleeves and learn to program – they will find themselves constrained to editing text in text files. They might have a choice between various text editors – but they will still find themselves working inside a paradigm which has remained fundamentally unchanged since the sixties. This is disappointing, because the act of coding is perhaps the most sophisticated form of human expression ever devised.

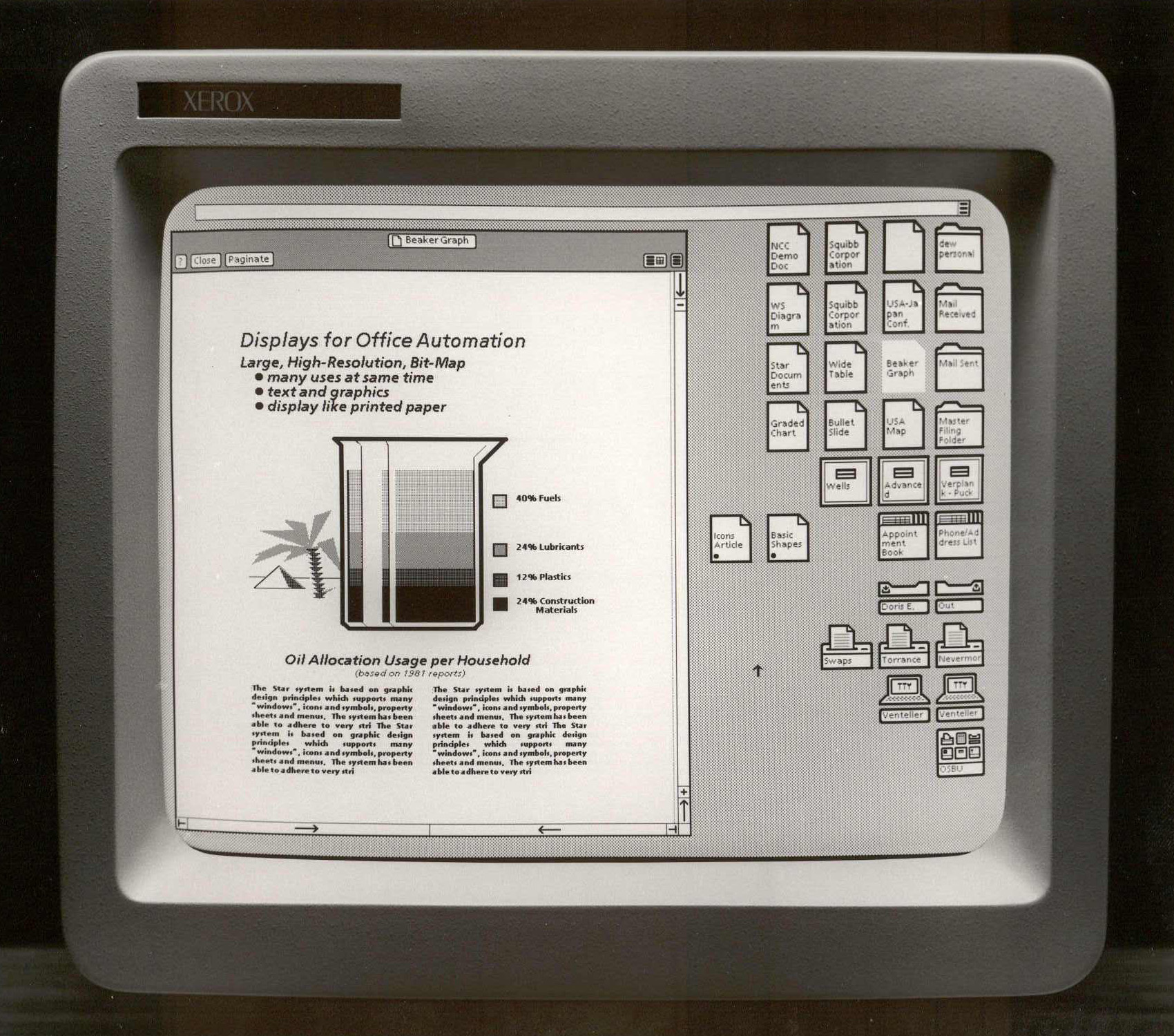

We tend to just accept this, but this way of doing things does not necessarily have to be the case. Back in the seventies, a team at the Xerox Palo Alto Research Center developed something known as Smalltalk – a programming language and integrated development environment which ran on the Xerox Alto personal computer. From The Smalltalk-76 Programming System: Design and Implementation (Ingalls, 1978):

The purpose of the Smalltalk project is to support children of all ages in the world of information. The challenge is to identify and harness metaphors of sufficient simplicity and power to allow a single person to have access to, and creative control over, information which ranges from numbers and text through sounds and images.

The Smalltalk development environment was itself written in Smalltalk, and as every line of code was interpreted at runtime, the system used to edit the code could also be used to edit itself. Dan Ingalls, creator of the Smalltalk virtual machine, gives a demonstration here:

The tradition of all the Smalltalk systems had been that they are basically live coding. So, all the time that you’re working there, the system is live and changeable – you can of course change your programs, but you can also change the system itself. And everything that’s a part of the system running is accessible to change.

For example, if the user wanted to change the way a window looked – this was as simple as finding the code responsible for rendering the window and changing it in place. There’s a well-known story about this, about when Steve Jobs and a team from Apple visited the Palo Alto Research Center in 1979. From The Early History of Smalltalk (Kay, 1993):

One of the best parts of the demo was when Steve Jobs said he didn’t like the blt-style scrolling we were using and asked if we could do it in a smooth continuous style. In less than a minute Dan found the methods involved, made the (relatively major) changes and scrolling was now continuous! This shocked the visitors, especially the programmers among them, as they had never seen a really powerful incremental system before.

In principle, this is incredibly important. At the extrema, this is what would allow a user complete representational freedom – control over the interface metaphors by which they interact with their data, the intermediary format between brain and computer.

So far as interface metaphors go, another significant innovation which came out of the Palo Alto Research Center in the seventies was the windows, icons, menus, pointer interaction paradigm – also known as WIMP. This was developed for Xerox personal computers, and laid down the foundations for how our graphical user interfaces work today.

What was novel about this was how it used visual metaphor to represent abstract concepts – for instance, files and folders could be represented using little icons that looked like pieces of paper. If you’re reading this on a desktop computer, take a look outside the page at the various buttons and scroll bars and so forth – much of this was first designed around this time period.

And it hasn’t changed much. You see, when Steve Jobs incorporated this style of user interface into the early Macintosh operating system, Apple lacked the resources to also build a live coding system like Smalltalk with which users could modify their operating systems. I don’t really blame them – an interpreted language like Smalltalk would have run extremely slowly on the consumer-grade hardware of the day – but the consequence of this is that our expectations of how flexible our operating systems should be have been essentially frozen in time.

Our hardware has only become cheaper and faster over the years, but we are still doing things the same way. I was optimistic at the time that this might change once multi-touch interfaces became ubiquitous, but our computers have only become more restrictive. Have you tried coding on an iPad? These devices do not even ship with any kind of code editor, let alone one that’s actually suited to a touchscreen device. Then, even if you do install a nice text editor – good luck actually shipping an iPad app without resorting to using a desktop computer.

So – anyone who owns a pair of high quality in-ear monitors will already know that our current technology has complete dominion over the auditory field; and as virtual reality headsets improve in quality we will soon achieve a similar level of control over the visual field. What happens once our brain-computer interface hardware has complete control over a user’s entire qualia state?

I feel like we are headed for one of two scenarios. Either our brain-computer interface paradigms get dictated by a major player like Apple who continues to repeat the patterns of the past; or they get designed by a new player like Neuralink who might be strong on hardware while lacking original vision for how software should work.

Will we get things right this time around, and hand control back to the users? What happens if we fail to develop self-modifying operating systems before this mythical future qualiatech arrives? To screw this up would be transcendental cringe indeed.

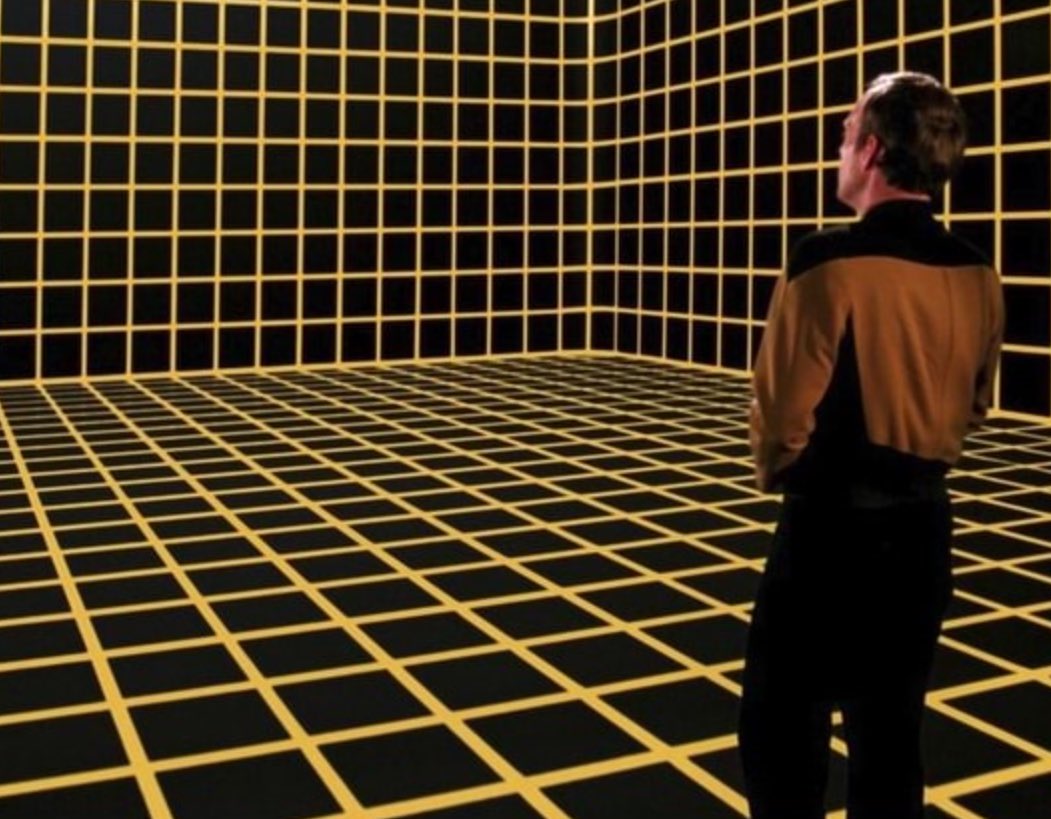

Qualia computing

Let us step now into a hypothetical – I’d like to imagine we now possess an idealised, qualia-complete brain-computer interface, one with direct read-write access to the phenomenal fields. This is a system which can induce any visual or somatic percept the user might conceivably desire.

What could we do with such a system? I discussed this with Louis Arge, and he had this to say:

Brain-computer interfaces open a use case of directly rendering high valence, and I’d suspect that’d be my primary use case, especially in a post-economic world – but I don’t think I’m particularly curious about experience. I wonder if I’d render complex art pieces or stories, if I could just render love or beauty or equanimity.

I quite enjoyed this conversation, and I suspect that I somewhat sympathise with this point of view; once you have this degree of control you could quite readily skip all the way to some quite hedonic states. I’d also like to acknowledge that there are probably a few readers who would find this to be scarily close to wireheading.

My personal stance is that I think sufficiently advanced user interface design is equivalent to solving coherent extrapolated volition – which converges upon valence gradient descent at the limit. But this is a philosophical and ethical tangent that I don’t really want to go down, because I feel like all the way between here and there there is a large number of really interesting interface design problems to be explored.

The visual field

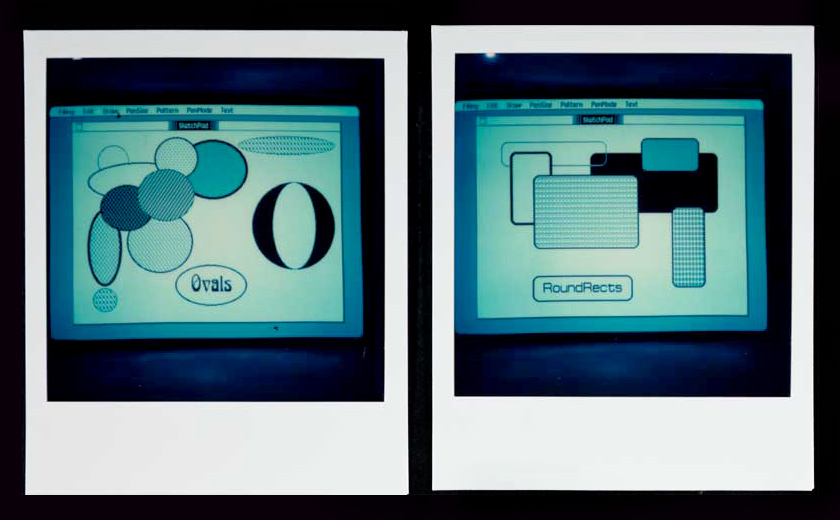

As a software developer, I’ve spent a lot of time using computer graphics libraries. At the lowest level, these libraries provide simple function calls for rendering two-dimensional geometric primitives – lines, rectangles, ellipses, Bézier curves, text, and so on – on a pixel grid. These are the low-level procedures from which more complex user interface elements are built.

I’m not afraid to say that the times I have used DMT have taught me many lessons about the human visual system, and this in turn has informed the way I think about interface design. I think it’s quite reasonable to use psychedelic experiences to help us understand the full design space for synthetic subjective experiences.

DMT is known to generate visual hallucinations that are crispy, geometric, hi-res. I know the human brain is capable of drawing sharp, accurate geometric primitives and user interface elements, because I’ve seen it do it before. Personally, when I trip, I often see text rendered in specific fonts – perhaps Times New Roman or Adobe Myriad Pro, or even quirkier ones like Bauhaus 93. I’ve encountered DMT realms which were clearly built from the same components as one would find in the macOS user interface and graphics libraries.

I guess geometric primitives are geometric primitives no matter what one’s rendering environment is. These days, I find myself wondering: what are the principles by which a visual field graphics library might operate? Grossberg’s filling in? Lehar’s flame fronts? Then, once these techniques are mastered, what user interface systems might we build with them?

There’s a catch. If you’ve embraced nonduality, you may have come to realise that you are not separate from your phenomenal fields – you are your phenomenal fields. With this in mind, I ask the reader – do you really want to be a UIButton?

I feel that there are much more imaginative things we could do instead, and I don’t just mean better interface metaphors. Perhaps we’ll even figure out a neurostimulation protocol which can generate hyperbolic or higher-dimensional experiences, and employ those for their computational properties. I’ll quote from Ada Palmer’s science fiction novel Too Like the Lightning, a scene in which the protagonist meets someone trained since childhood to handle such representations:

Carlyle smiled. “I presume it’s also propaganda that you never saw the sun?”

<true, actually. well, training is totally different for different kinds of set-sets, but for a cartesian it’s true. i first saw it when i was 17. it was smaller than i thought, and glarier. but now i can go watch a sunset anytime, i just don’t want to, it’s boring, so slow, monosensory. and before you fuss, i may have grown up never seeing a sunset, but you’ve never seen a six-dimensional homoskedastic crest up from the data sea, and you never will because you’re wasting those nerves on telling you your knee itches.>

The somatic field

This all said, with complete control over the somatic field, I bet that we could make a button press feel really good. But what else could we do with this?

Again, I’ll draw from my experience with psychedelics, as they have done some fairly strange and curious things to my somatic field over the years. I know DMT can completely dissolve the sense of having a body map – a paper which reviewed ten years’ worth of r/DMT comments found that this is reported in 17.3% of experiences – but this is not the only thing it can do. I’ve felt it tile my skin with oscillating patterns, and even had it frequency shift sensations up a few octaves – resulting in out-of-gamut sensory phenomena like ultraflavour.

Last year, I wrote a whole post about a body map distortion I encountered where my mouth became tessellated across space. At the time, I thought this gestured towards the kind of morphological freedom which future neurotech might enable:

What I experienced by accident was pretty weird, but indicative of the state space we might have to work with. What else might be possible, when there’s a Neuralink implant in every cortex? With the right cortical stimulation, could one grow wings or a tail?

The somatic field is not just a sensory channel; it’s also a channel through which we express volition, with movement – but this does not necessarily have to be coupled to a sense of having a body. The question of what constitutes a tool or an affordance gets interesting when the bodymind itself is malleable – and even the geometry of space itself is a mutable quantity. What could we achieve with a hyperbolic body map, and fractally branching arms and fingertips?

There’s a trip I had once, during which I developed a sense of tension in my temples – like the feeling of tension one gets from a muscular cramp, just not in a place which one would normally associate with having muscles. These patches of tension shifted outside my head and floated in space; I found I could squeeze them just like I would any muscle in my body, and as I did this, they would vibrate – zzzt – and this would cause objects in the visual field to vibrate in turn. This felt like developing psychic powers – for what is telekinetics, but expressing volition at a distance?

The video game designer Tim Rogers is the kind of person I would want to see working on psychic tactile interfaces. He presented a talk about the philosophy behind his virtual sport VIDEOBALL at the Game Developers Conference 2016:

It is the year 2016. We do not yet have a consumer-ready flying car. We do, however, have virtual reality. Our future sports will, therefore, not have cyborgs immune to concussion. We will remove the corporeal from our sports. We will translate athletics unto electronics. We will transform electronics into telekinetics. As I can reckon, we need a perfect sport if we are ever to become a post-war cyberpunk dystopia. This sport will not involve humans. It will not involve death or violence. It will take place inside players’ minds, and it will project directly into spectators’ minds. The sport of the future is an electronic game. The future sports electronic games are playable by humans of any body type, controlled with the mind, and regulated and officiated upon by the most laser-precise electronic intelligences. These sports are, at present, hypothetical.

Volition

When we interact with computers, the potential downside from false positives tends to be much larger than that of false negatives – insofar as our software cannot be designed to be entirely forgiving. At present, we really only interact with computers mechanically through deliberate motor action, so preventing false positives is about filtering those.

For instance, with the advent of touchscreen smartphones, the humble slide to unlock gesture long stood guard against false positive interaction events – and newer interaction modalities such as speech and gesture recognition present their own design challenges.
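
To make the filtering idea concrete, here is a minimal sketch of how a slide-style gate might reject accidental touches. This is not Apple's implementation; the function name `slide_unlocks`, its parameters, and the thresholds are all assumptions of mine, chosen only to illustrate trading a few false negatives for far fewer false positives.

```python
def slide_unlocks(positions, track_length=1.0, required_fraction=0.9):
    """Return True only if a touch trace looks like a deliberate slide.

    `positions` holds successive thumb positions along the track,
    measured from 0 (left edge) to `track_length` (right edge).
    """
    if len(positions) < 2:
        return False  # a single tap is never enough

    # Hypothetical rules: begin near the left edge, never move backwards,
    # and finish close to the right edge.
    starts_at_left = positions[0] <= 0.1 * track_length
    moves_forward = all(b >= a for a, b in zip(positions, positions[1:]))
    reaches_end = positions[-1] >= required_fraction * track_length

    return starts_at_left and moves_forward and reaches_end
```

A full deliberate swipe such as `[0.0, 0.3, 0.6, 0.95]` passes, while a stray tap or a brush that starts mid-track does not. The gate is deliberately strict: a failed unlock costs a second attempt, whereas an accidental one might cost a pocket-dialled phone call.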

Except for extreme cases like reflexive flinching I find I don’t have that much trouble inhibiting unintentional motor output – but I have no idea if we’ll be able to leverage the same inhibition machinery if and when we start building brain-computer interfaces. It’s possible that we’ll always need to confirm certain internal actions using external motor action. In William Gibson’s novel The Peripheral, a character’s brain implant is gated by deliberate tongue movement:

It just seemed to happen, as he most liked it to. Lubricated by the excellent whiskey, his tongue found the laminate on his palate of its own accord. An unfamiliar sigil appeared, a sort of impacted spiral, tribal blackwork. Referencing the Gyre, he assumed, which meant the patchers were now being incorporated into whatever the narrative of her current skin would become.

On the third ring, the sigil swallowed everything. He was in a wide, deep, vanishingly high-ceilinged terminal hall, granite and gray.

“Who’s calling, please?” asked a young Englishwoman, unseen.

“Wilf Netherton,” he said, “for Daedra.”

This seems like a crude solution; but it’s possible we may have no other option. While I find it easy to prevent accidental muscular movement, accidental attentional movement is another story.

At present, the mouse and pointer system serves as a sort of dual proxy for both a limb and attention. The user generally keeps the pointer under their fovea – itself a reasonable proxy for visual attention – and a click indicates interaction with the object of attention. More recently, the Apple Vision Pro virtual reality headset has shipped with some incredibly accurate and responsive eye tracking technology. In this case, the user can indicate their object of attention directly; but interaction with the object of attention remains gated by hand gestures. Apple also does not expose an API by which developers might access this high resolution eye tracking data, lest it be abused by entities which feed on human attention.

On the topic of attention, Andrés Gómez Emilsson from the Qualia Research Institute came along to our vibecamp discussion, and he had this to say about attentional dynamics:

Attention does a lot of things – there’s three things maybe worth highlighting. One is like, whatever you pay attention to gets energized. So that’s like a very generic principle.

The second one is that whatever you pay attention to increases the coupling constant. If you pay attention to both of your hands their vibration will synchronize – like increasing the surface area of their interactions. So maybe that can also be captured in the operating system.

And then the third one is, like, attention also has a kind of re-centering effect, which is like – which element of your experience are you going to choose as the center of mass, along which to orient everything else? That also seems like something implicitly present in the operating system.

DMT accelerates normal attentional dynamics, revealing these principles. In a sober state, this process is kept in check, but while on a modest amount of DMT it can feed back recursively. For instance, I’ve used a textured popcorn ceiling as a canvas upon which to sculpt simple volumetric figures – cones, cylinders, cubes rising out of the depth map – just by imagining what they would look like while my attention rested on my visual field. These were sufficiently robust that if I looked away briefly they would still be there when my gaze returned.

I daresay just about anything within imagination could be willed into being by such a process, from basic geometric primitives to entire synthetic realms like the one I described earlier. If readable from the outside, this would be a powerful mechanism for visual communication and freewheeling self-expression. One can imagine how this might go awry if an unpleasant imaginal object were to enter attention – compared to not clicking on something, not thinking of a pink elephant presents greater challenges.

Solving this class of problem might require some as yet undeveloped theory which merges internal alignment with phenomenology. Until then, when we want to communicate with computers in a completely precise way, we use programming languages. In our fanciful deep future scenario, perhaps it might work like this:

“Please write concise, readable code for synchronising the emotional components from one user’s somatic field harmonics with another’s. Prioritize clarity and simplicity. Use descriptive variable names and include brief comments for complex logic. Aim for efficiency without sacrificing understandability.”

Claude perched on the edge of the table, stroking his moustache with one hand and twirling his wand with the other.

“As an AI language model created by Anthropic to be helpful, harmless, and honest, I must ask you what’s up with the retro-style prompt engineering? It’s not the twenties anymore!”

Heather sighed. “I’m sorry, old habits do die hard. I didn’t mean to talk down to you. I trust you know what you are doing.”

Claude made pointed eye contact through horn-rimmed glasses.

“Ahem. As an AI language model created by Anthropic to be helpful, harmless, and honest, I do have concerns about the suffering risks involved with direct somatic field manipulation.”

“Which is why we’re going to audit what you come up with. I would like you to write me a selection of five programs, please, beginning with five different random seeds.”

Five Claudes took five different paces towards the whiteboard. Somewhere along the way, five wands had become five whiteboard markers. They set to work.

Soon, five abstract syntax trees sprawled across the whiteboard, which had grown in size and now distorted the space around it. Claude spun on his heels, stepped back into himselves, and took a small bow.

“As you can see, I’ve taken some creative liberties with my approach to the synchronisation problem. Some solutions prioritise rapid synchronisation and desynchronisation at the risk of creating partially-synchronised chimera states. Others take a gentler approach.”

With a little dramatic flair, Claude tapped the whiteboard with his wand. It began to dissolve, leaving behind a tangle of glowing red ink suspended in space, which morphed into a series of densely packed node-and-wire structures.

Heather contemplated the virtual hedgerow. “You don’t mind if I give these the smell test?”

“How do you mean?”

“I’d like to run a diagnostic that was crafted by a friend.”

The array of syntax trees further rearranged themselves, individual nodes following wayward trajectories as if governed by invisible magnetic forces of attraction or repulsion, some mutually intersecting in a manner reminiscent of higher-dimensional protein folding.

“Okay. Let’s turn up the temperature.”

The molecular clusters all began to vibrate, increasing in pitch until all that could be seen were five variably transparent clouds. Heather approached each in turn, using her hand to gently waft small quantities of vapour towards her nose.

She paused beside the second one. “There’s a lot going on in here. How many libraries did you import?”

“Seven.”

“I won’t be able to audit this by myself.” She moved on down the line. “This one’s unripe. I think it must have some type holes left in.”

She swirled her finger around inside the fourth. “Oh, I recognise this. Grass clippings. You used the Mudita Labs suite?”

“Yes.”

“Great job. Let’s unfurl this one, I’ll conduct a depth-first review.”

The cloud desublimated, while the other four programs dissipated into the breeze.

“Now that’s what I call a Turin-complete computing system!” Claude remarked.

“What?”

“Ah – Turin-complete computing refers to Luca Turin, a biophysicist who developed the vibration theory of olfaction. This theory suggests that the smell of a molecule is based on its vibrational—”

“I know. Can we drop the puns, please.”

Heather and Claude worked together, walking the syntax tree from root to branch, discussing conditionals and error handling at length as they encountered them. Occasionally she would pull out a pair of secateurs to prune leaf nodes and graft on new structures.

“I think this one’s safe to go.”

“Define safe.”

Heather lifted up a declaration, the program dangling from her fingertips. “This function is responsible for predicting phase coherence. We have no analytical solutions, and phase decoherence can happen abruptly; there’s no getting around that. I have written a catch that will induce a cessation state in the event that estimated somatic field valence dips too rapidly.”

“Reboot the system, got it.”

“I’ve identified an optimal beta tester based on an inverse kinematic vibe compatibility score. How about we get her on the phone?”

If morphological freedom is freedom of bodily schema, then representational freedom is freedom of mental schema. I hope this story illustrates what idealised representational freedom might look like.

Without user-modifiable programming environments, we won’t get diversity of programming interfaces. We definitely don’t get to use our olfactory lobes to detect code smell. At first impression, this might seem like a flippant example – but I think that olfactory perception is a reasonable sensory channel for representing extremely high-dimensional information while also leveraging autobiographical memory.

The rationale for routing information through a human mind at all is that there’s a computational benefit to doing so. Humans have some remarkable cognitive capabilities – long term memory, pattern matching, symmetry finding, shape rotation. If we cannot optimise our representations so as to make use of these, then we’ll get outflanked by artificial intelligence.

This seems to be the kind of thing that keeps Elon Musk up at night. Noland Arbaugh is the first patient to receive a Neuralink implant, allowing him to move a mouse cursor with his mind. In the most recent Neuralink update, Elon boasted of Noland’s exceptional performance on a simple clicking game designed to measure bandwidth in bits per second:

It’s a very simple game, you just have to click on the square. Anyone who wants to try this, I recommend going to the neuralink.com website and seeing if you can beat Noland’s record. You’ll find that it’s quite difficult to do so – and this is with version one of the device, and only with a small percentage of the electrodes working.

So this is really just the beginning, but even the beginning is twice as good as the world record. This is quite important to emphasize.

It’s only gonna get better from here. So the potential is to ultimately get to megabit level. So that’s – that’s part of the long term goal of improving the bandwidth of the brain-computer interface. If you think about how low the bandwidth normally is between a human and a device, the average bandwidth is extremely low, it’s less than one bit per second over the course of a day. If there are 86,400 seconds in a day, you’re outputting less than that number of bits to any given device.

This is actually quite important for human-AI symbiosis – just being able to communicate at a speed the AI can follow.

I hope he succeeds. If I am to witness the dawn of artificial intelligence within my lifetime – by then, I hope to have found higher bandwidth ways of posting about it than pushing buttons with my monkey fingers.

Commentary

I feel like I’m writing about problems that we’re not going to encounter for quite some time. Additionally, I suspect that the idea that we might ever achieve such precise control over our subjective experience might seem totally implausible.

Selen Atasoy’s connectome-specific harmonic wave theory is the reason I don’t think this is overly optimistic. In brief, this is the idea that the current state of somebody’s brain waves can be decomposed into a weighted sum of connectome harmonics – similar to taking a Fourier transform. This would be the neural correlate of someone’s vibes – from high level emotional tone all the way down to the most fine-grained visual and somatic phenomena.
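
To show what "decomposed into a weighted sum of harmonics" means in practice, here is a toy sketch using NumPy. It assumes the harmonics are the eigenvectors of a graph Laplacian built from the connectivity graph, and it substitutes a made-up ring of eight regions for a real connectome; the `activity` values are invented purely for illustration.

```python
import numpy as np

# Toy "connectome": a ring of 8 regions, each linked to its two neighbours.
# (An assumption for illustration; a real connectome is far larger and
# is derived from imaging data.)
n = 8
adjacency = np.zeros((n, n))
for i in range(n):
    adjacency[i, (i + 1) % n] = 1
    adjacency[(i + 1) % n, i] = 1

# Graph Laplacian L = D - A. Its eigenvectors play the role of harmonics,
# ordered from smooth (low eigenvalue) to rapidly varying (high eigenvalue).
degree = np.diag(adjacency.sum(axis=1))
laplacian = degree - adjacency
eigenvalues, harmonics = np.linalg.eigh(laplacian)  # columns are orthonormal

# A made-up activity pattern over the 8 regions.
activity = np.array([0.2, 1.0, 0.7, -0.3, -0.9, -0.4, 0.1, 0.5])

# Decompose: one weight per harmonic, analogous to Fourier coefficients.
weights = harmonics.T @ activity

# Recompose: the weighted sum of harmonics recovers the original pattern.
reconstructed = harmonics @ weights
```

On a ring, these eigenvectors span the same space as the ordinary discrete Fourier modes, which is why the comparison to a Fourier transform is apt. On an irregular graph the harmonics take whatever shapes the wiring dictates.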

I’m not sure how widely accepted this model might be, but rational psychonaut types like myself tend to be sympathetic to this idea given the sheer prevalence of resonance phenomena we observe – Steven Lehar developed what he calls harmonic resonance theory to explain his intuitions around this. Perhaps connectome harmonics will turn out to be the intermediary representation required to facilitate brain-to-computer or brain-to-brain transmission of internal representations.

There’s a noninvasive neuromodulation technique on my radar at the moment called transcranial focused ultrasound. The risks involved are still up for debate – but early trials are showing some promising results for reducing connectivity in the default mode network, the functional network associated with a sense of self.

Focusing acoustic waves through the skull and onto specific brain regions presents significant technical challenges, but the technology is simple enough in principle that a small number of pioneers are building their own devices out of off-the-shelf ultrasonic transducers. Extremely speculatively, perhaps one of them will figure out how to upregulate or downregulate individual connectome harmonics, and verify connectome-specific harmonic wave theory? I look forward to the opportunity to try it out for myself.